September 26, 2021

A new research pilot by Weizmann Institute scientists uses artificial intelligence to unravel the mysteries of colliding particles

“Our work is similar to inspecting the remains of a plane crash where we’re trying to reconstruct what colour pants the passenger from seat A17 was wearing,” said Jonathan Shlomi, a doctoral student in Professor Eilam Gross’s group in the Weizmann Institute of Science’s Particle Physics and Astrophysics Department.

Rather than a crash site in the middle of the ocean or on a stormy mountaintop, however, Shlomi is referring to the renowned Large Hadron Collider (LHC) at CERN, the European Organization for Nuclear Research, where colliding particles are likened to a crashing plane.

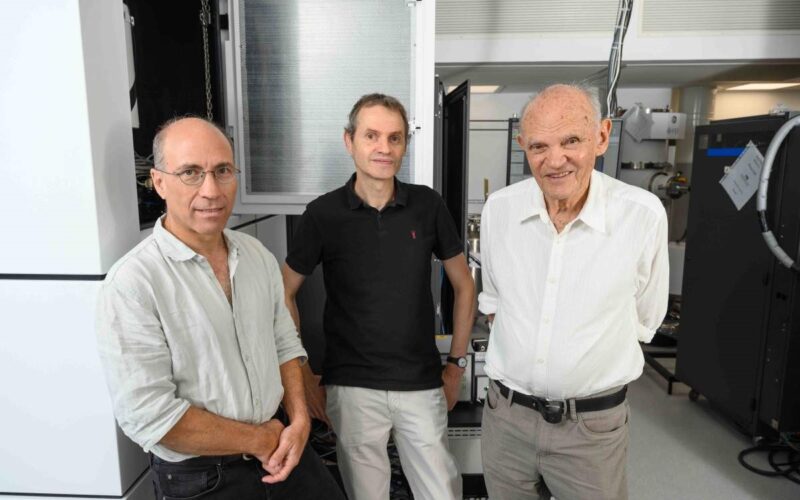

Gross is no stranger to CERN’s particle accelerator. Between the years 2011 and 2013 he led a team of investigators in search of the Higgs boson, using the ATLAS detector; his was one of two groups that announced the discovery of the elusive particle in July 2012. The discovery of the Higgs boson, often referred to as the ‘God particle’, marked the solution of a decades-old physical conundrum – how do particles gain mass?

According to the model proposed by Peter Higgs in the 1960s, this particle is the main culprit. However, despite many attempts to detect it, the particle remained a theoretical entity until 2012. Against all odds, the particle was discovered, the riddle was solved, and in 2014 Gross returned to Israel. But after solving one of the greatest questions of modern physics, what was left for Gross to study?

The obvious way forward in particle, or high energy, physics is the discovery of an unknown particle or any other novel feature of the subatomic world. After the ‘God particle’ was finally found, efforts at CERN focused on proving other theoretical models such as supersymmetry, according to which each boson should have a fermion superpartner and vice versa. However, since these efforts reached a dead end, Gross came to the realization that it might be time to tread a new path: enhancing and fine-tuning current data analysis methods in order to improve both the extraction of information from existing data and the efficiency with which new particles are searched for in both present and future accelerators.

To achieve this, Gross established a new research group at the Weizmann Institute that focuses on solving major questions in particle physics using machine learning approaches. Shlomi was the first student to join the group, and he immediately undertook the mission to improve the analysis of data from the ATLAS detector, which contains some 100 million components.

When particles collide inside the ATLAS detector, its components record energy measurements that scientists must then decipher. Given that more than 1 billion collisions occur every second while the accelerator is running, this setup poses a two-headed problem: on the one hand, the staggering amount of data cannot be analysed manually; on the other hand, since these are fast-evolving events occurring on a microscopic scale, the detector’s components, numerous as they are, still cannot record every event at the same level of precision.

The first challenge that Gross’s team decided to tackle was improving the capacity to distinguish different types of quarks – elementary particles that can be detected when larger particles collide. There are currently six known types, or flavours, of quarks, each with its own distinct mass that determines the particular type of quark a Higgs boson will decay into following a collision. Thus, for example, the probability of observing a Higgs boson decaying into bottom quarks is very high – owing to the bottom quark’s large mass – while up and down quarks are so light that such decays are practically undetectable. The charm quark, which is neither too light nor too heavy, is difficult to identify on its own, as well as to distinguish from the bottom quark.

To address this difficulty, the research group developed a particle flow algorithm that analyses the movement and energy dispersal patterns of these particles in time and space. By supplying the algorithm with billions of simulated collision events, the scientists were able to demonstrate that a computer can be taught to identify and analyse the relevant data.
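As a rough illustration of what it means to teach a computer from labelled simulations, the Python sketch below is a toy stand-in and not the group's algorithm. It assumes a single invented feature (a made-up "decay length"), arbitrary average values for bottom and charm events, and a simple cut-off in place of a neural network. The function names and numbers are hypothetical, chosen only to show the workflow: simulate events whose true type is known, learn a rule from them, then check the rule on fresh simulated events.

```python
import random


def simulate_events(n, rng):
    """Generate n toy labelled events as (feature, label) pairs.

    The feature is an invented 'decay length'; the two means below are
    arbitrary illustration values, not physical measurements.
    """
    events = []
    for _ in range(n):
        label = rng.choice(["bottom", "charm"])
        mean = 3.0 if label == "bottom" else 1.0  # hypothetical values
        events.append((rng.expovariate(1.0 / mean), label))
    return events


def accuracy(events, threshold):
    """Fraction of events classified correctly by the rule
    'feature above threshold means bottom, otherwise charm'."""
    correct = sum((x > threshold) == (label == "bottom") for x, label in events)
    return correct / len(events)


def train_threshold(events):
    """Pick the cut-off that classifies the training events best."""
    candidates = [i * 0.1 for i in range(1, 100)]
    return max(candidates, key=lambda t: accuracy(events, t))


rng = random.Random(0)  # fixed seed so the run is repeatable
train = simulate_events(5000, rng)  # simulated events used for learning
test = simulate_events(5000, rng)   # fresh simulated events for checking

threshold = train_threshold(train)
test_accuracy = accuracy(test, threshold)
print(f"learned cut-off: {threshold:.1f}, accuracy on new events: {test_accuracy:.2f}")
```

Because the two toy distributions overlap heavily, the learned rule is far from perfect, which echoes the point made above: charm and bottom quarks are hard to tell apart, and more informative inputs are needed to do better.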

“We currently don’t have the means to build more sensitive detectors but since we understand the physics behind particle collisions, we can create high resolution simulations of these events in order to ask: How would the detector react if it were to have additional components that would enable more accurate measurements of the collisions?” said Professor Gross.

Now that Gross and his team have been able to provide a proof of concept for the algorithm that they developed, the next step forward will be to test it on larger datasets. “We understand today that in order to extract greater scientific value from the measurements carried out at the LHC, the sensitivity of our data analysis methods needs to be as high as possible,” said Shlomi.

“Accurate simulations are a valuable tool that could help us to maximize the method’s sensitivity.”

“I believe that the field of particle physics as a whole is heading in the direction of artificial intelligence – to an extent that no scientist in the future would even bother to mention that they rely on machine learning for their analysis, because it would be a given, a fundamental tool in our toolbox,” said Gross. “We’re not there yet, but when we do get to that place, sooner rather than later, the entire field will transform and we will witness a revolution in how high energy physics data is analysed.”

Professor Eilam Gross

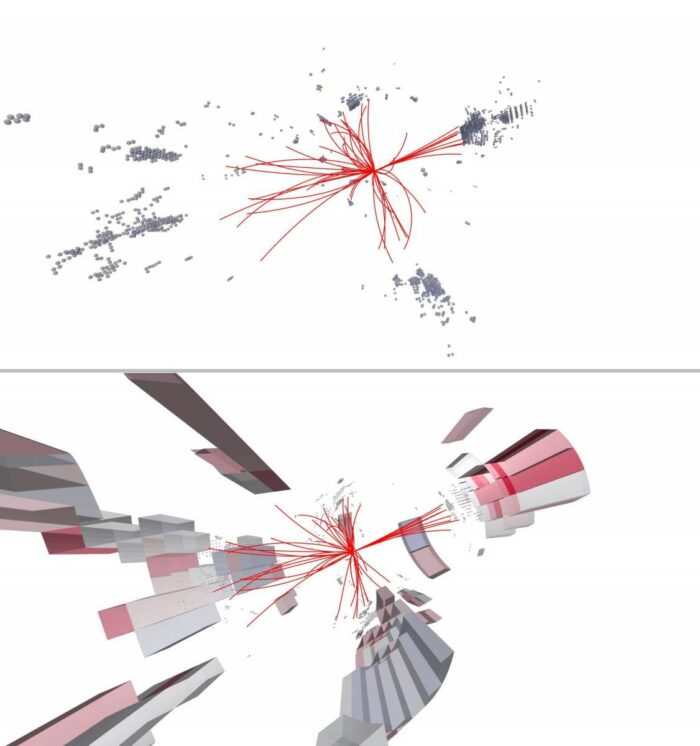

Using a simulation of the collision, as if it were detected by a more sensitive detector (top image), “teaches” the computer, by deep learning, to more efficiently and accurately analyse it (bottom image). The red lines indicate the particles’ paths after the collision.